This manuscript (permalink) was automatically generated from greenelab/deep-review@44ff95a on December 28, 2021.

Brock C. Christensen2.1⚄,

Anthony Gitter2.2,2.3⚄,†,

Daniel S. Himmelstein2.4⚄,

Alexander J. Titus2.1⚄,

Joshua J. Levy2.5⚄,

Casey S. Greene2.6,2.7⚄,†,

Daniel C. Elton2.8⚄,

The Version 1.0 Deep Review Authors

⚄ — Author order for version 2.0 is currently randomized with each new build.

† — To whom correspondence should be addressed: gitter@biostat.wisc.edu (A.G.) and greenescientist@gmail.com (C.S.G.)

2.1. Department of Epidemiology, Geisel School of Medicine, Dartmouth College, Lebanon, NH

2.2. Department of Biostatistics and Medical Informatics, University of Wisconsin-Madison, Madison, WI

2.3. Morgridge Institute for Research, Madison, WI

2.4. Department of Systems Pharmacology and Translational Therapeutics, University of Pennsylvania, Philadelphia, Pennsylvania, United States of America

2.5. Program in Quantitative Biomedical Sciences, Geisel School of Medicine at Dartmouth, Lebanon, NH

2.6. Department of Systems Pharmacology and Translational Therapeutics, Perelman School of Medicine, University of Pennsylvania, Philadelphia, PA

2.7. Childhood Cancer Data Lab, Alex’s Lemonade Stand Foundation, Philadelphia, PA

2.8. Radiology and Imaging Sciences, National Institutes of Health Clinical Center, Bethesda, MD

Travers Ching1.1,☯,

Daniel S. Himmelstein1.2,

Brett K. Beaulieu-Jones1.3,

Alexandr A. Kalinin1.4,

Brian T. Do1.5,

Gregory P. Way1.2,

Enrico Ferrero1.8,

Paul-Michael Agapow1.9,

Michael Zietz1.2,

Michael M. Hoffman1.10,1.11,1.12,

Wei Xie1.13,

Gail L. Rosen1.14,

Benjamin J. Lengerich1.15,

Johnny Israeli1.16,

Jack Lanchantin1.17,

Stephen Woloszynek1.14,

Anne E. Carpenter1.18,

Avanti Shrikumar1.19,

Jinbo Xu1.20,

Evan M. Cofer1.21,1.22,

Christopher A. Lavender1.23,

Srinivas C. Turaga1.24,

Amr M. Alexandari1.19,

Zhiyong Lu1.25,

David J. Harris1.26,

Dave DeCaprio1.27,

Yanjun Qi1.17,

Anshul Kundaje1.19,1.28,

Yifan Peng1.25,

Laura K. Wiley1.29,

Marwin H.S. Segler1.30,

Simina M. Boca1.31,

S. Joshua Swamidass1.32,

Austin Huang1.33,

Anthony Gitter1.34,1.35,†,

Casey S. Greene1.2,†

☯ — Author order was determined with a randomized algorithm

† — To whom correspondence should be addressed: gitter@biostat.wisc.edu (A.G.) and greenescientist@gmail.com (C.S.G.)

1.1. Molecular Biosciences and Bioengineering Graduate Program, University of Hawaii at Manoa, Honolulu, HI

1.2. Department of Systems Pharmacology and Translational Therapeutics, Perelman School of Medicine, University of Pennsylvania, Philadelphia, PA

1.3. Genomics and Computational Biology Graduate Group, Perelman School of Medicine, University of Pennsylvania, Philadelphia, PA

1.4. Department of Computational Medicine and Bioinformatics, University of Michigan Medical School, Ann Arbor, MI

1.5. Harvard Medical School, Boston, MA

1.6. Program in Quantitative Biomedical Sciences, Geisel School of Medicine at Dartmouth, Lebanon, NH

1.7. Department of Epidemiology, Geisel School of Medicine, Dartmouth College, Lebanon, NH

1.8. Computational Biology and Stats, Target Sciences, GlaxoSmithKline, Stevenage, United Kingdom

1.9. Data Science Institute, Imperial College London, London, United Kingdom

1.10. Princess Margaret Cancer Centre, Toronto, ON, Canada

1.11. Department of Medical Biophysics, University of Toronto, Toronto, ON, Canada

1.12. Department of Computer Science, University of Toronto, Toronto, ON, Canada

1.13. Electrical Engineering and Computer Science, Vanderbilt University, Nashville, TN

1.14. Ecological and Evolutionary Signal-processing and Informatics Laboratory, Department of Electrical and Computer Engineering, Drexel University, Philadelphia, PA

1.15. Computational Biology Department, School of Computer Science, Carnegie Mellon University, Pittsburgh, PA

1.16. Biophysics Program, Stanford University, Stanford, CA

1.17. Department of Computer Science, University of Virginia, Charlottesville, VA

1.18. Imaging Platform, Broad Institute of Harvard and MIT, Cambridge, MA

1.19. Department of Computer Science, Stanford University, Stanford, CA

1.20. Toyota Technological Institute at Chicago, Chicago, IL

1.21. Department of Computer Science, Trinity University, San Antonio, TX

1.22. Lewis-Sigler Institute for Integrative Genomics, Princeton University, Princeton, NJ

1.23. Integrative Bioinformatics, National Institute of Environmental Health Sciences, National Institutes of Health, Research Triangle Park, NC

1.24. Howard Hughes Medical Institute, Janelia Research Campus, Ashburn, VA

1.25. National Center for Biotechnology Information and National Library of Medicine, National Institutes of Health, Bethesda, MD

1.26. Department of Wildlife Ecology and Conservation, University of Florida, Gainesville, FL

1.27. ClosedLoop.ai, Austin, TX

1.28. Department of Genetics, Stanford University, Stanford, CA

1.29. Division of Biomedical Informatics and Personalized Medicine, University of Colorado School of Medicine, Aurora, CO

1.30. Institute of Organic Chemistry, Westfälische Wilhelms-Universität Münster, Münster, Germany

1.31. Innovation Center for Biomedical Informatics, Georgetown University Medical Center, Washington, DC

1.32. Department of Pathology and Immunology, Washington University in Saint Louis, Saint Louis, MO

1.33. Department of Medicine, Brown University, Providence, RI

1.34. Department of Biostatistics and Medical Informatics, University of Wisconsin-Madison, Madison, WI

1.35. Morgridge Institute for Research, Madison, WI

Deep learning describes a class of machine learning algorithms that are capable of combining raw inputs into layers of intermediate features. These algorithms have recently shown impressive results across a variety of domains. Biology and medicine are data-rich disciplines, but the data are complex and often ill-understood. Hence, deep learning techniques may be particularly well-suited to solve problems of these fields. We examine applications of deep learning to a variety of biomedical problems—patient classification, fundamental biological processes, and treatment of patients—and discuss whether deep learning will be able to transform these tasks or if the biomedical sphere poses unique challenges. Following from an extensive literature review, we find that deep learning has yet to revolutionize biomedicine or definitively resolve any of the most pressing challenges in the field, but promising advances have been made on the prior state of the art. Even though improvements over previous baselines have been modest in general, the recent progress indicates that deep learning methods will provide valuable means for speeding up or aiding human investigation. Though progress has been made linking a specific neural network’s prediction to input features, understanding how users should interpret these models to make testable hypotheses about the system under study remains an open challenge. Furthermore, the limited amount of labeled data for training presents problems in some domains, as do legal and privacy constraints on work with sensitive health records. Nonetheless, we foresee deep learning enabling changes at both bench and bedside with the potential to transform several areas of biology and medicine.

Biology and medicine are rapidly becoming data-intensive. A recent comparison of genomics with social media, online videos, and other data-intensive disciplines suggests that genomics alone will equal or surpass other fields in data generation and analysis within the next decade [1]. The volume and complexity of these data present new opportunities, but also pose new challenges. Automated algorithms that extract meaningful patterns could lead to actionable knowledge and change how we develop treatments, categorize patients, or study diseases, all within privacy-critical environments.

The term deep learning has come to refer to a collection of new techniques that, together, have demonstrated breakthrough gains over existing best-in-class machine learning algorithms across several fields. For example, over the past five years these methods have revolutionized image classification and speech recognition due to their flexibility and high accuracy [2]. More recently, deep learning algorithms have shown promise in fields as diverse as high-energy physics [3], computational chemistry [4], dermatology [5], and translation among written languages [6]. Across fields, “off-the-shelf” implementations of these algorithms have produced comparable or higher accuracy than previous best-in-class methods that required years of extensive customization, and specialized implementations are now being used at industrial scales.

Deep learning approaches grew from research on artificial neurons, which were first proposed in 1943 [7] as a model for how the neurons in a biological brain process information. The history of artificial neural networks—referred to as “neural networks” throughout this article—is interesting in its own right [8]. In neural networks, inputs are fed into the input layer, which feeds into one or more hidden layers, which eventually link to an output layer. A layer consists of a set of nodes, sometimes called “features” or “units,” which are connected via edges to the immediately earlier and the immediately deeper layers. In some special neural network architectures, nodes can connect to themselves with a delay. The nodes of the input layer generally consist of the variables being measured in the dataset of interest—for example, each node could represent the intensity value of a specific pixel in an image or the expression level of a gene in a specific transcriptomic experiment. The neural networks used for deep learning have multiple hidden layers. Each layer essentially performs feature construction for the layers before it. The training process used often allows layers deeper in the network to contribute to the refinement of earlier layers. For this reason, these algorithms can automatically engineer features that are suitable for many tasks and customize those features for one or more specific tasks.

Deep learning does many of the same things as more familiar machine learning approaches. In particular, deep learning approaches can be used both in supervised applications—where the goal is to accurately predict one or more labels or outcomes associated with each data point—in the place of regression approaches, as well as in unsupervised, or “exploratory” applications—where the goal is to summarize, explain, or identify interesting patterns in a data set—as a form of clustering. Deep learning methods may in fact combine both of these steps. When sufficient data are available and labeled, these methods construct features tuned to a specific problem and combine those features into a predictor. In fact, if the dataset is “labeled” with binary classes, a simple neural network with no hidden layers and no cycles between units is equivalent to logistic regression if the output layer is a sigmoid (logistic) function of the input layer. Similarly, for continuous outcomes, linear regression can be seen as a single-layer neural network. Thus, in some ways, supervised deep learning approaches can be seen as an extension of regression models that allow for greater flexibility and are especially well-suited for modeling non-linear relationships among the input features. Recently, hardware improvements and very large training datasets have allowed these deep learning techniques to surpass other machine learning algorithms for many problems. In a famous and early example, scientists from Google demonstrated that a neural network “discovered” that cats, faces, and pedestrians were important components of online videos [9] without being told to look for them. What if, more generally, deep learning takes advantage of the growth of data in biomedicine to tackle challenges in this field? Could these algorithms identify the “cats” hidden in our data—the patterns unknown to the researcher—and suggest ways to act on them? In this review, we examine deep learning’s application to biomedical science and discuss the unique challenges that biomedical data pose for deep learning methods.

Several important advances make the current surge of work done in this area possible. Easy-to-use software packages have brought the techniques of the field out of the specialist’s toolkit to a broad community of computational scientists. Additionally, new techniques for fast training have enabled their application to larger datasets [10]. Dropout of nodes, edges, and layers makes networks more robust, even when the number of parameters is very large. Finally, the larger datasets now available are also sufficient for fitting the many parameters that exist for deep neural networks. The convergence of these factors currently makes deep learning extremely adaptable and capable of addressing the nuanced differences of each domain to which it is applied.

This review discusses recent work in the biomedical domain, and most successful applications select neural network architectures that are well suited to the problem at hand. We sketch out a few simple example architectures in Figure 1. If data have a natural adjacency structure, a convolutional neural network (CNN) can take advantage of that structure by emphasizing local relationships, especially when convolutional layers are used in early layers of the neural network. Other neural network architectures such as autoencoders require no labels and are now regularly used for unsupervised tasks. In this review, we do not exhaustively discuss the different types of deep neural network architectures; an overview of the principal terms used herein is given in Table 1. Table 1 also provides select example applications, though in practice each neural network architecture has been broadly applied across multiple types of biomedical data. A recent book from Goodfellow et al. covers neural network architectures in detail [11], and LeCun et al. provide a more general introduction [2].

| Term | Definition | Example applications |

|---|---|---|

| Supervised learning | Machine-learning approaches with goal of prediction of labels or outcomes | |

| Unsupervised learning | Machine-learning approaches with goal of data summarization or pattern identification | |

| Neural network (NN) | Machine-learning approach inspired by biological neurons where inputs are fed into one or more layers, producing an output layer | |

| Deep neural network | NN with multiple hidden layers. Training happens over the network, and consequently such architectures allow for feature construction to occur alongside optimization of the overall training objective. | |

| Feed-forward neural network (FFNN) | NN that does not have cycles between nodes in the same layer | Most of the examples below are special cases of FFNNs, except recurrent neural networks. |

| Multi-layer perceptron (MLP) | Type of FFNN with at least one hidden layer where each deeper layer is a nonlinear function of each earlier layer | MLPs do not impose structure and are frequently used when there is no natural ordering of the inputs (e.g. as with gene expression measurements). |

| Convolutional neural network (CNN) | A NN with layers in which connectivity preserves local structure. If the data meet the underlying assumptions performance is often good, and such networks can require fewer examples to train effectively because they have fewer parameters and also provide improved efficiency. | CNNs are used for sequence data—such as DNA sequences—or grid data—such as medical and microscopy images. |

| Recurrent neural network (RNN) | A neural network with cycles between nodes within a hidden layer. | The RNN architecture is used for sequential data—such as clinical time series and text or genome sequences. |

| Long short-term memory (LSTM) neural network | This special type of RNN has features that enable models to capture longer-term dependencies. | LSTMs are gaining a substantial foothold in the analysis of natural language, and may become more widely applied to biological sequence data. |

| Autoencoder (AE) | A NN where the training objective is to minimize the error between the output layer and the input layer. Such neural networks are unsupervised and are often used for dimensionality reduction. | Autoencoders have been used for unsupervised analysis of gene expression data as well as data extracted from the electronic health record. |

| Variational autoencoder (VAE) | This special type of generative AE learns a probabilistic latent variable model. | VAEs have been shown to often produce meaningful reduced representations in the imaging domain, and some early publications have used VAEs to analyze gene expression data. |

| Denoising autoencoder (DA) | This special type of AE includes a step where noise is added to the input during the training process. The denoising step acts as smoothing and may allow for effective use on input data that is inherently noisy. | Like AEs, DAs have been used for unsupervised analysis of gene expression data as well as data extracted from the electronic health record. |

| Generative neural network | Neural networks that fall into this class can be used to generate data similar to input data. These models can be sampled to produce hypothetical examples. | A number of the unsupervised learning neural network architectures that are summarized here can be used in a generative fashion. |

| Restricted Boltzmann machine (RBM) | A generative NN that forms the building block for many deep learning approaches, having a single input layer and a single hidden layer, with no connections between the nodes within each layer | RBMs have been applied to combine multiple types of omic data (e.g. DNA methylation, mRNA expression, and miRNA expression). |

| Deep belief network (DBN) | Generative NN with several hidden layers, which can be obtained from combining multiple RBMs | DBNs can be used to predict new relationships in a drug-target interaction network. |

| Generative adversarial network (GAN) | A generative NN approach where two neural networks are trained. One neural network, the generator, is provided with a set of randomly generated inputs and tasked with generating samples. The second, the discriminator, is trained to differentiate real and generated samples. After the two neural networks are trained against each other, the resulting generator can be used to produce new examples. | GANs can synthesize new examples with the same statistical properties of datasets that contain individual-level records and are subject to sharing restrictions. They have also been applied to generate microscopy images. |

| Adversarial training | A process by which artificial training examples are maliciously designed to fool a NN and then input as training examples to make the resulting NN robust (no relation to GANs) | Adversarial training has been used in image analysis. |

| Data augmentation | A process by which transformations that do not affect relevant properties of the input data (e.g. arbitrary rotations of histopathology images) are applied to training examples to increase the size of the training set. | Data augmentation is widely used in the analysis of images because rotation transformations for biomedical images often do not change relevant properties of the image. |

While deep learning shows increased flexibility over other machine learning approaches, as seen in the remainder of this review, it requires large training sets in order to fit the hidden layers, as well as accurate labels for the supervised learning applications. For these reasons, deep learning has recently become popular in some areas of biology and medicine, while having lower adoption in other areas. At the same time, this highlights the potentially even larger role that it may play in future research, given the increases in data in all biomedical fields. It is also important to see it as a branch of machine learning and acknowledge that it has the same limitations as other approaches in that field. In particular, the results are still dependent on the underlying study design and the usual caveats of correlation versus causation still apply—a more precise answer is only better than a less precise one if it answers the correct question.

With this review, we ask the question: what is needed for deep learning to transform how we categorize, study, and treat individuals to maintain or restore health? We choose a high bar for “transform.” Andrew Grove, the former CEO of Intel, coined the term Strategic Inflection Point to refer to a change in technologies or environment that requires a business to be fundamentally reshaped [12]. Here, we seek to identify whether deep learning is an innovation that can induce a Strategic Inflection Point in the practice of biology or medicine.

There are already a number of reviews focused on applications of deep learning in biology [13,14,15,16,17], healthcare [18,19,20], and drug discovery [4,21,22,23]. Under our guiding question, we sought to highlight cases where deep learning enabled researchers to solve challenges that were previously considered infeasible or makes difficult, tedious analyses routine. We also identified approaches that researchers are using to sidestep challenges posed by biomedical data. We find that domain-specific considerations have greatly influenced how to best harness the power and flexibility of deep learning. Model interpretability is often critical. Understanding the patterns in data may be just as important as fitting the data. In addition, there are important and pressing questions about how to build networks that efficiently represent the underlying structure and logic of the data. Domain experts can play important roles in designing networks to represent data appropriately, encoding the most salient prior knowledge and assessing success or failure. There is also great potential to create deep learning systems that augment biologists and clinicians by prioritizing experiments or streamlining tasks that do not require expert judgment. We have divided the large range of topics into three broad classes: Disease and Patient Categorization, Fundamental Biological Study, and Treatment of Patients. Below, we briefly introduce the types of questions, approaches and data that are typical for each class in the application of deep learning.

A key challenge in biomedicine is the accurate classification of diseases and disease subtypes. In oncology, current “gold standard” approaches include histology, which requires interpretation by experts, or assessment of molecular markers such as cell surface receptors or gene expression. One example is the PAM50 approach to classifying breast cancer where the expression of 50 marker genes divides breast cancer patients into four subtypes. Substantial heterogeneity still remains within these four subtypes [24,25]. Given the increasing wealth of molecular data available, a more comprehensive subtyping seems possible. Several studies have used deep learning methods to better categorize breast cancer patients: For instance, denoising autoencoders, an unsupervised approach, can be used to cluster breast cancer patients [26], and CNNs can help count mitotic divisions, a feature that is highly correlated with disease outcome in histological images [27]. Despite these recent advances, a number of challenges exist in this area of research, most notably the integration of molecular and imaging data with other disparate types of data such as electronic health records (EHRs).

Deep learning can be applied to answer more fundamental biological questions; it is especially suited to leveraging large amounts of data from high-throughput “omics” studies. One classic biological problem where machine learning, and now deep learning, has been extensively applied is molecular target prediction. For example, deep recurrent neural networks (RNNs) have been used to predict gene targets of microRNAs [28], and CNNs have been applied to predict protein residue-residue contacts and secondary structure [29,30,31]. Other recent exciting applications of deep learning include recognition of functional genomic elements such as enhancers and promoters [32,33,34] and prediction of the deleterious effects of nucleotide polymorphisms [35].

Although the application of deep learning to patient treatment is just beginning, we expect new methods to recommend patient treatments, predict treatment outcomes, and guide the development of new therapies. One type of effort in this area aims to identify drug targets and interactions or predict drug response. Another uses deep learning on protein structures to predict drug interactions and drug bioactivity [36]. Drug repositioning using deep learning on transcriptomic data is another exciting area of research [37]. Restricted Boltzmann machines (RBMs) can be combined into deep belief networks (DBNs) to predict novel drug-target interactions and formulate drug repositioning hypotheses [38,39]. Finally, deep learning is also prioritizing chemicals in the early stages of drug discovery for new targets [23].

In healthcare, individuals are diagnosed with a disease or condition based on symptoms, the results of certain diagnostic tests, or other factors. Once diagnosed with a disease, an individual might be assigned a stage based on another set of human-defined rules. While these rules are refined over time, the process is evolutionary and ad hoc, potentially impeding the identification of underlying biological mechanisms and their corresponding treatment interventions.

Deep learning methods applied to a large corpus of patient phenotypes may provide a meaningful and more data-driven approach to patient categorization. For example, they may identify new shared mechanisms that would otherwise be obscured due to ad hoc historical definitions of disease. Perhaps deep neural networks, by reevaluating data without the context of our assumptions, can reveal novel classes of treatable conditions.

In spite of such optimism, the ability of deep learning models to indiscriminately extract predictive signals must also be assessed and operationalized with care. Imagine a deep neural network is provided with clinical test results gleaned from electronic health records. Because physicians may order certain tests based on their suspected diagnosis, a deep neural network may learn to “diagnose” patients simply based on the tests that are ordered. For some objective functions, such as predicting an International Classification of Diseases (ICD) code, this may offer good performance even though it does not provide insight into the underlying disease beyond physician activity. This challenge is not unique to deep learning approaches; however, it is important for practitioners to be aware of these challenges and the possibility in this domain of constructing highly predictive classifiers of questionable utility.

Our goal in this section is to assess the extent to which deep learning is already contributing to the discovery of novel categories. Where it is not, we focus on barriers to achieving these goals. We also highlight approaches that researchers are taking to address challenges within the field, particularly with regards to data availability and labeling.

Deep learning methods have transformed the analysis of natural images and video, and similar examples are beginning to emerge with medical images. Deep learning has been used to classify lesions and nodules; localize organs, regions, landmarks and lesions; segment organs, organ substructures and lesions; retrieve images based on content; generate and enhance images; and combine images with clinical reports [19,40].

Though there are many commonalities with the analysis of natural images, there are also key differences. In all cases that we examined, fewer than one million images were available for training, and datasets are often many orders of magnitude smaller than collections of natural images. Researchers have developed subtask-specific strategies to address this challenge.

Data augmentation provides an effective strategy for working with small training sets. The practice is exemplified by a series of papers that analyze images from mammographies [41,42,43,44,45]. To expand the number and diversity of images, researchers constructed adversarial [44] or augmented [45] examples. Adversarial training examples are constructed by selecting targeted small transformations to input data that cause a model to produce very different outputs. Augmented training applies perturbations to the input data that do not change the underlying meaning, such as rotations for pathology images. An alternative in the domain is to train towards human-created features before subsequent fine-tuning [42], which can help to sidestep this challenge though it does give up deep learning techniques’ strength as feature constructors.

A second strategy repurposes features extracted from natural images by deep learning models, such as ImageNet [46], for new purposes. Diagnosing diabetic retinopathy through color fundus images became an area of focus for deep learning researchers after a large labeled image set was made publicly available during a 2015 Kaggle competition [47]. Most participants trained neural networks from scratch [47,48,49], but Gulshan et al. [50] repurposed a 48-layer Inception-v3 deep architecture pre-trained on natural images and surpassed the state-of-the-art specificity and sensitivity. Such features were also repurposed to detect melanoma, the deadliest form of skin cancer, from dermoscopic [51,52] and non-dermoscopic images of skin lesions [5,53,54] as well as age-related macular degeneration [55]. Pre-training on natural images can enable very deep networks to succeed without overfitting. For the melanoma task, reported performance was competitive with or better than a board of certified dermatologists [5,51]. Reusing features from natural images is also an emerging approach for radiographic images, where datasets are often too small to train large deep neural networks without these techniques [56,57,58,59]. A deep CNN trained on natural images boosts performance in radiographic images [58]. However, the target task required either re-training the initial model from scratch with special pre-processing or fine-tuning of the whole network on radiographs with heavy data augmentation to avoid overfitting.

The technique of reusing features from a different task falls into the broader area of transfer learning (see Discussion). Though we’ve mentioned numerous successes for the transfer of natural image features to new tasks, we expect that a lower proportion of negative results have been published. The analysis of magnetic resonance images (MRIs) is also faced with the challenge of small training sets. In this domain, Amit et al. [60] investigated the tradeoff between pre-trained models from a different domain and a small CNN trained only with MRI images. In contrast with the other selected literature, they found a smaller network trained with data augmentation on a few hundred images from a few dozen patients can outperform a pre-trained out-of-domain classifier.

Another way of dealing with limited training data is to divide rich data—e.g. 3D images—into numerous reduced projections. Shin et al. [57] compared various deep network architectures, dataset characteristics, and training procedures for computer tomography-based (CT) abnormality detection. They concluded that networks as deep as 22 layers could be useful for 3D data, despite the limited size of training datasets. However, they noted that choice of architecture, parameter setting, and model fine-tuning needed is very problem- and dataset-specific. Moreover, this type of task often depends on both lesion localization and appearance, which poses challenges for CNN-based approaches. Straightforward attempts to capture useful information from full-size images in all three dimensions simultaneously via standard neural network architectures were computationally unfeasible. Instead, two-dimensional models were used to either process image slices individually (2D) or aggregate information from a number of 2D projections in the native space (2.5D).

Roth et al. compared 2D, 2.5D, and 3D CNNs on a number of tasks for computer-aided detection from CT scans and showed that 2.5D CNNs performed comparably well to 3D analogs, while requiring much less training time, especially on augmented training sets [61]. Another advantage of 2D and 2.5D networks is the wider availability of pre-trained models. However, reducing the dimensionality is not always helpful. Nie et al. [62] showed that multimodal, multi-channel 3D deep architecture was successful at learning high-level brain tumor appearance features jointly from MRI, functional MRI, and diffusion MRI images, outperforming single-modality or 2D models. Overall, the variety of modalities, properties and sizes of training sets, the dimensionality of input, and the importance of end goals in medical image analysis are provoking a development of specialized deep neural network architectures, training and validation protocols, and input representations that are not characteristic of widely-studied natural images.

Predictions from deep neural networks can be evaluated for use in workflows that also incorporate human experts. In a large dataset of mammography images, Kooi et al. [63] demonstrated that deep neural networks outperform a traditional computer-aided diagnosis system at low sensitivity and perform comparably at high sensitivity. They also compared network performance to certified screening radiologists on a patch level and found no significant difference between the network and the readers. However, using deep methods for clinical practice is challenged by the difficulty of assigning a level of confidence to each prediction. Leibig et al. [49] estimated the uncertainty of deep networks for diabetic retinopathy diagnosis by linking dropout networks with approximate Bayesian inference. Techniques that assign confidences to each prediction should aid physician-computer interactions and improve uptake by physicians.

Systems to aid in the analysis of histology slides are also promising use cases for deep learning [64]. Ciresan et al. [27] developed one of the earliest approaches for histology slides, winning the 2012 International Conference on Pattern Recognition’s Contest on Mitosis Detection while achieving human-competitive accuracy. In more recent work, Wang et al. [65] analyzed stained slides of lymph node slices to identify cancers. On this task a pathologist has about a 3% error rate. The pathologist did not produce any false positives, but did have a number of false negatives. The algorithm had about twice the error rate of a pathologist, but the errors were not strongly correlated. Combining pre-trained deep network architectures with multiple augmentation techniques enabled accurate detection of breast cancer from a very small set of histology images with less than 100 images per class [66]. In this area, these algorithms may be ready to be incorporated into existing tools to aid pathologists and reduce the false negative rate. Ensembles of deep learning and human experts may help overcome some of the challenges presented by data limitations.

One source of training examples with rich phenotypical annotations is the EHR. Billing information in the form of ICD codes are simple annotations but phenotypic algorithms can combine laboratory tests, medication prescriptions, and patient notes to generate more reliable phenotypes. Recently, Lee et al. [67] developed an approach to distinguish individuals with age-related macular degeneration from control individuals. They trained a deep neural network on approximately 100,000 images extracted from structured electronic health records, reaching greater than 93% accuracy. The authors used their test set to evaluate when to stop training. In other domains, this has resulted in a minimal change in the estimated accuracy [68], but we recommend the use of an independent test set whenever feasible.

Rich clinical information is stored in EHRs. However, manually annotating a large set requires experts and is time consuming. For chest X-ray studies, a radiologist usually spends a few minutes per example. Generating the number of examples needed for deep learning is infeasibly expensive. Instead, researchers may benefit from using text mining to generate annotations [69], even if those annotations are of modest accuracy. Wang et al. [70] proposed to build predictive deep neural network models through the use of images with weak labels. Such labels are automatically generated and not verified by humans, so they may be noisy or incomplete. In this case, they applied a series of natural language processing (NLP) techniques to the associated chest X-ray radiological reports. They first extracted all diseases mentioned in the reports using a state-of-the-art NLP tool, then applied a new method, NegBio [71], to filter negative and equivocal findings in the reports. Evaluation on four independent datasets demonstrated that NegBio is highly accurate for detecting negative and equivocal findings (~90% in F₁ score, which balances precision and recall [72]). The resulting dataset [73] consisted of 112,120 frontal-view chest X-ray images from 30,805 patients, and each image was associated with one or more text-mined (weakly-labeled) pathology categories (e.g. pneumonia and cardiomegaly) or “no finding” otherwise. Further, Wang et al. [70] used this dataset with a unified weakly-supervised multi-label image classification framework to detect common thoracic diseases. It showed superior performance over a benchmark using fully-labeled data.

Another example of semi-automated label generation for hand radiograph segmentation employed positive mining, an iterative procedure that combines manual labeling with automatic processing [74]. First, the initial training set was created by manually labeling 100 of 12,600 unlabeled radiographs that were used to train a model and predict labels for the rest of the dataset. Then, poor quality predictions were discarded through manual inspection, the initial training set was expanded with the acceptable segmentations, and the process was repeated. This procedure had to be repeated six times to obtain good quality segmentation labeling for all radiographs, except for 100 corner cases that still required manual annotation. These annotations allowed accurate segmentation of all hand images in the test set and boosted the final performance in radiograph classification [74].

With the exception of natural image-like problems (e.g. melanoma detection), biomedical imaging poses a number of challenges for deep learning. Datasets are typically small, annotations can be sparse, and images are often high-dimensional, multimodal, and multi-channel. Techniques like transfer learning, heavy dataset augmentation, and the use of multi-view and multi-stream architectures are more common than in the natural image domain. Furthermore, high model sensitivity and specificity can translate directly into clinical value. Thus, prediction evaluation, uncertainty estimation, and model interpretation methods are also of great importance in this domain (see Discussion). Finally, there is a need for better pathologist-computer interaction techniques that will allow combining the power of deep learning methods with human expertise and lead to better-informed decisions for patient treatment and care.

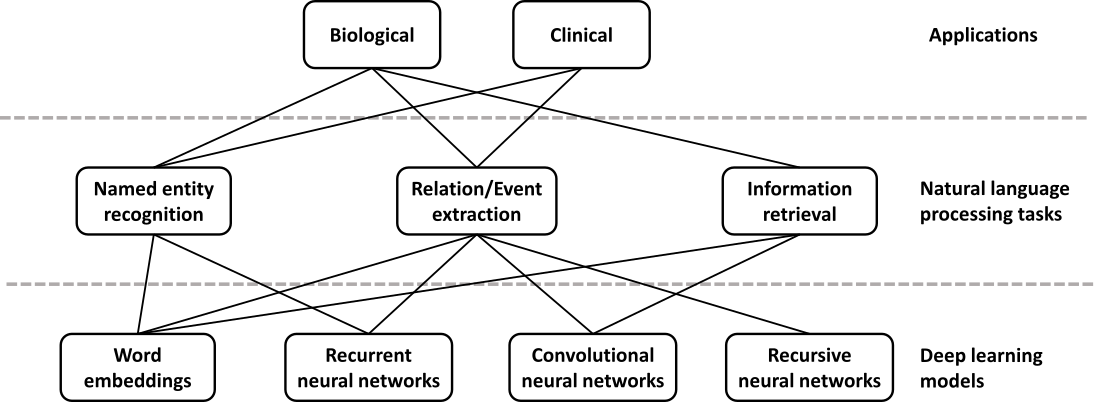

Due to the rapid growth of scholarly publications and EHRs, biomedical text mining has become increasingly important in recent years. The main tasks in biological and clinical text mining include, but are not limited to, named entity recognition, relation/event extraction, and information retrieval (Figure 2). Deep learning is appealing in this domain because of its competitive performance versus traditional methods and ability to overcome challenges in feature engineering. Relevant applications can be stratified by the application domain (biomedical literature vs. clinical notes) and the actual task (e.g. concept or relation extraction).

Named entity recognition (NER) is a task of identifying text spans that refer to a biological concept of a specific class, such as disease or chemical, in a controlled vocabulary or ontology. NER is often needed as a first step in many complex text mining systems. The current state-of-the-art methods typically reformulate the task as a sequence labeling problem and use conditional random fields [75,76,77]. In recent years, word embeddings that contain rich latent semantic information of words have been widely used to improve the NER performance. Liu et al. studied the effect of word embeddings on drug name recognition and compared them with traditional semantic features [78]. Tang et al. investigated word embeddings in gene, DNA, and cell line mention detection tasks [79]. Moreover, Wu et al. examined the use of neural word embeddings for clinical abbreviation disambiguation [80]. Liu et al. exploited task-oriented resources to learn word embeddings for clinical abbreviation expansion [81].

Relation extraction involves detecting and classifying semantic relationships between entities from the literature. At present, kernel methods or feature-based approaches are commonly applied [82,83,84]. Deep learning can relieve the feature sparsity and engineering problems. Some studies focused on jointly extracting biomedical entities and relations simultaneously [85,86], while others applied deep learning on relation classification given the relevant entities. For example, both multichannel dependency-based CNNs [87] and shortest path-based CNNs [88,89] are well-suited for sentence-based protein-protein extraction. Jiang et al. proposed a biomedical domain-specific word embedding model to reduce the manual labor of designing semantic representation for the same task [90]. Gu et al. employed a maximum entropy model and a CNN model for chemical-induced disease relation extraction at the inter- and intra-sentence level, respectively [91]. For drug-drug interactions, Zhao et al. used a CNN that employs word embeddings with the syntactic information of a sentence as well as features of part-of-speech tags and dependency trees [92]. Asada et al. experimented with an attention CNN [93], and Yi et al. proposed an RNN model with multiple attention layers [94]. In both cases, it is a single model with attention mechanism, which allows the decoder to focus on different parts of the source sentence. As a result, it does not require dependency parsing or training multiple models. Both attention CNN and RNN have comparable results, but the CNN model has an advantage in that it can be easily computed in parallel, hence making it faster with recent graphics processing units (GPUs).

For biotopes event extraction, Li et al. employed CNNs and distributed representation [95] while Mehryary et al. used long short-term memory (LSTM) networks to extract complicated relations [96]. Li et al. applied word embedding to extract complete events from biomedical text and achieved results comparable to the state-of-the-art systems [97]. There are also approaches that identify event triggers rather than the complete event [98,99]. Taken together, deep learning models outperform traditional kernel methods or feature-based approaches by 1–5% in f-score. Among various deep learning approaches, CNNs stand out as the most popular model both in terms of computational complexity and performance, while RNNs have achieved continuous progress.

Information retrieval is a task of finding relevant text that satisfies an information need from within a large document collection. While deep learning has not yet achieved the same level of success in this area as seen in others, the recent surge of interest and work suggest that this may be quickly changing. For example, Mohan et al. described a deep learning approach to modeling the relevance of a document’s text to a query, which they applied to the entire biomedical literature [100].

To summarize, deep learning has shown promising results in many biomedical text mining tasks and applications. However, to realize its full potential in this domain, either large amounts of labeled data or technical advancements in current methods coping with limited labeled data are required.

EHR data include substantial amounts of free text, which remains challenging to approach [101]. Often, researchers developing algorithms that perform well on specific tasks must design and implement domain-specific features [102]. These features capture unique aspects of the literature being processed. Deep learning methods are natural feature constructors. In recent work, Chalapathy et al. evaluated the extent to which deep learning methods could be applied on top of generic features for domain-specific concept extraction [103]. They found that performance was in line with, but lower than the best domain-specific method [103]. This raises the possibility that deep learning may impact the field by reducing the researcher time and cost required to develop specific solutions, but it may not always lead to performance increases.

In recent work, Yoon et al. [104] analyzed simple features using deep neural networks and found that the patterns recognized by the algorithms could be re-used across tasks. Their aim was to analyze the free text portions of pathology reports to identify the primary site and laterality of tumors. The only features the authors supplied to the algorithms were unigrams (counts for single words) and bigrams (counts for two-word combinations) in a free text document. They subset the full set of words and word combinations to the 400 most common. The machine learning algorithms that they employed (naïve Bayes, logistic regression, and deep neural networks) all performed relatively similarly on the task of identifying the primary site. However, when the authors evaluated the more challenging task, evaluating the laterality of each tumor, the deep neural network outperformed the other methods. Of particular interest, when the authors first trained a neural network to predict the primary site and then repurposed those features as a component of a secondary neural network trained to predict laterality, the performance was higher than a laterality-trained neural network. This demonstrates how deep learning methods can repurpose features across tasks, improving overall predictions as the field tackles new challenges. The Discussion further reviews this type of transfer learning.

Several authors have created reusable feature sets for medical terminologies using natural language processing and neural embedding models, as popularized by word2vec [105]. Minarro-Giménez et al. [106] applied the word2vec deep learning toolkit to medical corpora and evaluated the efficiency of word2vec in identifying properties of pharmaceuticals based on mid-sized, unstructured medical text corpora without any additional background knowledge. A goal of learning terminologies for different entities in the same vector space is to find relationships between different domains (e.g. drugs and the diseases they treat). It is difficult for us to provide a strong statement on the broad utility of these methods. Manuscripts in this area tend to compare algorithms applied to the same data but lack a comparison against overall best-practices for one or more tasks addressed by these methods. Techniques have been developed for free text medical notes [107], ICD and National Drug Codes [108,109], and claims data [110]. Methods for neural embeddings learned from electronic health records have at least some ability to predict disease-disease associations and implicate genes with a statistical association with a disease [111], but the evaluations performed did not differentiate between simple predictions (i.e. the same disease in different sites of the body) and non-intuitive ones. Jagannatha and Yu [112] further employed a bidirectional LSTM structure to extract adverse drug events from electronic health records, and Lin et al. [113] investigated using CNNs to extract temporal relations. While promising, a lack of rigorous evaluation of the real-world utility of these kinds of features makes current contributions in this area difficult to evaluate. Comparisons need to be performed to examine the true utility against leading approaches (i.e. algorithms and data) as opposed to simply evaluating multiple algorithms on the same potentially limited dataset.

Identifying consistent subgroups of individuals and individual health trajectories from clinical tests is also an active area of research. Approaches inspired by deep learning have been used for both unsupervised feature construction and supervised prediction. Early work by Lasko et al. [114], combined sparse autoencoders and Gaussian processes to distinguish gout from leukemia from uric acid sequences. Later work showed that unsupervised feature construction of many features via denoising autoencoder neural networks could dramatically reduce the number of labeled examples required for subsequent supervised analyses [115]. In addition, it pointed towards features learned during unsupervised training being useful for visualizing and stratifying subgroups of patients within a single disease. In a concurrent large-scale analysis of EHR data from 700,000 patients, Miotto et al. [116] used a deep denoising autoencoder architecture applied to the number and co-occurrence of clinical events to learn a representation of patients (DeepPatient). The model was able to predict disease trajectories within one year with over 90% accuracy, and patient-level predictions were improved by up to 15% when compared to other methods. Choi et al. [117] attempted to model the longitudinal structure of EHRs with an RNN to predict future diagnosis and medication prescriptions on a cohort of 260,000 patients followed for 8 years (Doctor AI). Pham et al. [118] built upon this concept by using an RNN with a LSTM architecture enabling explicit modelling of patient trajectories through the use of memory cells. The method, DeepCare, performed better than shallow models or plain RNN when tested on two independent cohorts for its ability to predict disease progression, intervention recommendation and future risk prediction. Nguyen et al. [119] took a different approach and used word embeddings from EHRs to train a CNN that could detect and pool local clinical motifs to predict unplanned readmission after six months, with performance better than the baseline method (Deepr). Razavian et al. [120] used a set of 18 common lab tests to predict disease onset using both CNN and LSTM architectures and demonstrated an improvement over baseline regression models. However, numerous challenges including data integration (patient demographics, family history, laboratory tests, text-based patient records, image analysis, genomic data) and better handling of streaming temporal data with many features will need to be overcome before we can fully assess the potential of deep learning for this application area.

Still, recent work has also revealed domains in which deep networks have proven superior to traditional methods. Survival analysis models the time leading to an event of interest from a shared starting point, and in the context of EHR data, often associates these events to subject covariates. Exploring this relationship is difficult, however, given that EHR data types are often heterogeneous, covariates are often missing, and conventional approaches require the covariate-event relationship be linear and aligned to a specific starting point [121]. Early approaches, such as the Faraggi-Simon feed-forward network, aimed to relax the linearity assumption, but performance gains were lacking [122]. Katzman et al. in turn developed a deep implementation of the Faraggi-Simon network that, in addition to outperforming Cox regression, was capable of comparing the risk between a given pair of treatments, thus potentially acting as recommender system [123]. To overcome the remaining difficulties, researchers have turned to deep exponential families, a class of latent generative models that are constructed from any type of exponential family distributions [124]. The result was a deep survival analysis model capable of overcoming challenges posed by missing data and heterogeneous data types, while uncovering nonlinear relationships between covariates and failure time. They showed their model more accurately stratified patients as a function of disease risk score compared to the current clinical implementation.

There is a computational cost for these methods, however, when compared to traditional, non-neural network approaches. For the exponential family models, despite their scalability [125], an important question for the investigator is whether he or she is interested in estimates of posterior uncertainty. Given that these models are effectively Bayesian neural networks, much of their utility simplifies to whether a Bayesian approach is warranted for a given increase in computational cost. Moreover, as with all variational methods, future work must continue to explore just how well the posterior distributions are approximated, especially as model complexity increases [126].

A dearth of true labels is perhaps among the biggest obstacles for EHR-based analyses that employ machine learning. Popular deep learning (and other machine learning) methods are often used to tackle classification tasks and thus require ground-truth labels for training. For EHRs this can mean that researchers must hire multiple clinicians to manually read and annotate individual patients’ records through a process called chart review. This allows researchers to assign “true” labels, i.e. those that match our best available knowledge. Depending on the application, sometimes the features constructed by algorithms also need to be manually validated and interpreted by clinicians. This can be time consuming and expensive [127]. Because of these costs, much of this research, including the work cited in this review, skips the process of expert review. Clinicians’ skepticism for research without expert review may greatly dampen their enthusiasm for the work and consequently reduce its impact. To date, even well-resourced large national consortia have been challenged by the task of acquiring enough expert-validated labeled data. For instance, in the eMERGE consortia and PheKB database [128], most samples with expert validation contain only 100 to 300 patients. These datasets are quite small even for simple machine learning algorithms. The challenge is greater for deep learning models with many parameters. While unsupervised and semi-supervised approaches can help with small sample sizes, the field would benefit greatly from large collections of anonymized records in which a substantial number of records have undergone expert review. This challenge is not unique to EHR-based studies. Work on medical images, omics data in applications for which detailed metadata are required, and other applications for which labels are costly to obtain will be hampered as long as abundant curated data are unavailable.

Successful approaches to date in this domain have sidestepped this challenge by making methodological choices that either reduce the need for labeled examples or that use transformations to training data to increase the number of times it can be used before overfitting occurs. For example, the unsupervised and semi-supervised methods that we have discussed reduce the need for labeled examples [115]. The anchor and learn framework [129] uses expert knowledge to identify high-confidence observations from which labels can be inferred. If transformations are available that preserve the meaningful content of the data, the adversarial and augmented training techniques discussed above can reduce overfitting. While these can be easily imagined for certain methods that operate on images, it is more challenging to figure out equivalent transformations for a patient’s clinical test results. Consequently, it may be hard to employ such training examples with other applications. Finally, approaches that transfer features can also help use valuable training data most efficiently. Rajkomar et al. trained a deep neural network using generic images before tuning using only radiology images [58]. Datasets that require many of the same types of features might be used for initial training, before fine tuning takes place with the more sparse biomedical examples. Though the analysis has not yet been attempted, it is possible that analogous strategies may be possible with electronic health records. For example, features learned from the electronic health record for one type of clinical test (e.g. a decrease over time in a lab value) may transfer across phenotypes. Methods to accomplish more with little high-quality labeled data arose in other domains and may also be adapted to this challenge, e.g. data programming [130]. In data programming, noisy automated labeling functions are integrated.

Numerous commentators have described data as the new oil [131,132]. The idea behind this metaphor is that data are available in large quantities, valuable once refined, and this underlying resource will enable a data-driven revolution in how work is done. Contrasting with this perspective, Ratner, Bach, and Ré described labeled training data, instead of data, as “The New New Oil” [133]. In this framing, data are abundant and not a scarce resource. Instead, new approaches to solving problems arise when labeled training data become sufficient to enable them. Based on our review of research on deep learning methods to categorize disease, the latter framing rings true.

We expect improved methods for domains with limited data to play an important role if deep learning is going to transform how we categorize states of human health. We don’t expect that deep learning methods will replace expert review. We expect them to complement expert review by allowing more efficient use of the costly practice of manual annotation.

To construct the types of very large datasets that deep learning methods thrive on, we need robust sharing of large collections of data. This is in part a cultural challenge. We touch on this challenge in the Discussion section. Beyond the cultural hurdles around data sharing, there are also technological and legal hurdles related to sharing individual health records or deep models built from such records. This subsection deals primarily with these challenges.

EHRs are designed chiefly for clinical, administrative and financial purposes, such as patient care, insurance, and billing [134]. Science is at best a tertiary priority, presenting challenges to EHR-based research in general and to deep learning research in particular. Although there is significant work in the literature around EHR data quality and the impact on research [135], we focus on three types of challenges: local bias, wider standards, and legal issues. Note these problems are not restricted to EHRs but can also apply to any large biomedical dataset, e.g. clinical trial data.

Even within the same healthcare system, EHRs can be used differently [136,137]. Individual users have unique documentation and ordering patterns, with different departments and different hospitals having different priorities that code patients and introduce missing data in a non-random fashion [138]. Patient data may be kept across several “silos” within a single health system (e.g. separate nursing documentation, registries, etc.). Even the most basic task of matching patients across systems can be challenging due to data entry issues [139]. The situation is further exacerbated by the ongoing introduction, evolution, and migration of EHR systems, especially where reorganized and acquired healthcare facilities have to merge. Further, even the ostensibly least-biased data type, laboratory measurements, can be biased based by both the healthcare process and patient health state [140]. As a result, EHR data can be less complete and less objective than expected.

In the wider picture, standards for EHRs are numerous and evolving. Proprietary systems, indifferent and scattered use of health information standards, and controlled terminologies makes combining and comparison of data across systems challenging [141]. Further diversity arises from variation in languages, healthcare practices, and demographics. Merging EHRs gathered in different systems (and even under different assumptions) is challenging [142].

Combining or replicating studies across systems thus requires controlling for both the above biases and dealing with mismatching standards. This has the practical effect of reducing cohort size, limiting statistical significance, preventing the detection of weak effects [143], and restricting the number of parameters that can be trained in a model. Further, rule-based algorithms have been popular in EHR-based research, but because these are developed at a single institution and trained with a specific patient population, they do not transfer easily to other healthcare systems [144]. Genetic studies using EHR data are subject to even more bias, as the differences in population ancestry across health centers (e.g. proportion of patients with African or Asian ancestry) can affect algorithm performance. For example, Wiley et al. [145] showed that warfarin dosing algorithms often under-perform in African Americans, illustrating that some of these issues are unresolved even at a treatment best practices level. Lack of standardization also makes it challenging for investigators skilled in deep learning to enter the field, as numerous data processing steps must be performed before algorithms are applied.

Finally, even if data were perfectly consistent and compatible across systems, attempts to share and combine EHR data face considerable legal and ethical barriers. Patient privacy can severely restrict the sharing and use of EHR data [146]. Here again, standards are heterogeneous and evolving, but often EHR data cannot be exported or even accessed directly for research purposes without appropriate consent. In the United States, research use of EHR data is subject both to the Common Rule and the Health Insurance Portability and Accountability Act (HIPAA). Ambiguity in the regulatory language and individual interpretation of these rules can hamper use of EHR data [147]. Once again, this has the effect of making data gathering more laborious and expensive, reducing sample size and study power.

Several technological solutions have been proposed in this direction, allowing access to sensitive data satisfying privacy and legal concerns. Software like DataShield [148] and ViPAR [149], although not EHR-specific, allow querying and combining of datasets and calculation of summary statistics across remote sites by “taking the analysis to the data”. The computation is carried out at the remote site. Conversely, the EH4CR project [141] allows analysis of private data by use of an inter-mediation layer that interprets remote queries across internal formats and datastores and returns the results in a de-identified standard form, thus giving real-time consistent but secure access. Continuous Analysis [150] can allow reproducible computing on private data. Using such techniques, intermediate results can be automatically tracked and shared without sharing the original data. While none of these have been used in deep learning, the potential is there.

Even without sharing data, algorithms trained on confidential patient data may present security risks or accidentally allow for the exposure of individual level patient data. Tramer et al. [151] showed the ability to steal trained models via public application programming interfaces (APIs). Dwork and Roth [152] demonstrate the ability to expose individual level information from accurate answers in a machine learning model. Attackers can use similar attacks to find out if a particular data instance was present in the original training set for the machine learning model [153], in this case, whether a person’s record was present. To protect against these attacks, Simmons et al. [154] developed the ability to perform genome-wide association studies (GWASs) in a differentially private manner, and Abadi et al. [155] show the ability to train deep learning classifiers under the differential privacy framework.

These attacks also present a potential hazard for approaches that aim to generate data. Choi et al. propose generative adversarial neural networks (GANs) as a tool to make sharable EHR data [156], and Esteban et al. [157] showed that recurrent GANs could be used for time series data. However, in both cases the authors did not take steps to protect the model from such attacks. There are approaches to protect models, but they pose their own challenges. Training in a differentially private manner provides a limited guarantee that an algorithm’s output will be equally likely to occur regardless of the participation of any one individual. The limit is determined by parameters which provide a quantification of privacy. Beaulieu-Jones et al. demonstrated the ability to generate data that preserved properties of the SPRINT clinical trial with GANs under the differential privacy framework [158]. Both Beaulieu-Jones et al. and Esteban et al. train models on synthetic data generated under differential privacy and observe performance from a transfer learning evaluation that is only slightly below models trained on the original, real data. Taken together, these results suggest that differentially private GANs may be an attractive way to generate sharable datasets for downstream reanalysis.

Federated learning [159] and secure aggregations [160,161] are complementary approaches that reinforce differential privacy. Both aim to maintain privacy by training deep learning models from decentralized data sources such as personal mobile devices without transferring actual training instances. This is becoming of increasing importance with the rapid growth of mobile health applications. However, the training process in these approaches places constraints on the algorithms used and can make fitting a model substantially more challenging. It can be trivial to train a model without differential privacy, but quite difficult to train one within the differential privacy framework [158]. This problem can be particularly pronounced with small sample sizes.

While none of these problems are insurmountable or restricted to deep learning, they present challenges that cannot be ignored. Technical evolution in EHRs and data standards will doubtless ease—although not solve—the problems of data sharing and merging. More problematic are the privacy issues. Those applying deep learning to the domain should consider the potential of inadvertently disclosing the participants’ identities. Techniques that enable training on data without sharing the raw data may have a part to play. Training within a differential privacy framework may often be warranted.

In April 2016, the European Union adopted new rules regarding the use of personal information, the General Data Protection Regulation [162]. A component of these rules can be summed up by the phrase “right to an explanation”. Those who use machine learning algorithms must be able to explain how a decision was reached. For example, a clinician treating a patient who is aided by a machine learning algorithm may be expected to explain decisions that use the patient’s data. The new rules were designed to target categorization or recommendation systems, which inherently profile individuals. Such systems can do so in ways that are discriminatory and unlawful.

As datasets become larger and more complex, we may begin to identify relationships in data that are important for human health but difficult to understand. The algorithms described in this review and others like them may become highly accurate and useful for various purposes, including within medical practice. However, to discover and avoid discriminatory applications it will be important to consider interpretability alongside accuracy. A number of properties of genomic and healthcare data will make this difficult.

First, research samples are frequently non-representative of the general population of interest; they tend to be disproportionately sick [163], male [164], and European in ancestry [165]. One well-known consequence of these biases in genomics is that penetrance is consistently lower in the general population than would be implied by case-control data, as reviewed in [163]. Moreover, real genetic associations found in one population may not hold in other populations with different patterns of linkage disequilibrium (even when population stratification is explicitly controlled for [166]). As a result, many genomic findings are of limited value for people of non-European ancestry [165] and may even lead to worse treatment outcomes for them. Methods have been developed for mitigating some of these problems in genomic studies [163,166], but it is not clear how easily they can be adapted for deep models that are designed specifically to extract subtle effects from high-dimensional data. For example, differences in the equipment that tended to be used for cases versus controls have led to spurious genetic findings (e.g. Sebastiani et al.’s retraction [167]). In some contexts, it may not be possible to correct for all of these differences to the degree that a deep network is unable to use them. Moreover, the complexity of deep networks makes it difficult to determine when their predictions are likely to be based on such nominally-irrelevant features of the data (called “leakage” in other fields [168]). When we are not careful with our data and models, we may inadvertently say more about the way the data was collected (which may involve a history of unequal access and discrimination) than about anything of scientific or predictive value. This fact can undermine the privacy of patient data [168] or lead to severe discriminatory consequences [169].

There is a small but growing literature on the prevention and mitigation of data leakage [168], as well as a closely-related literature on discriminatory model behavior [170], but it remains difficult to predict when these problems will arise, how to diagnose them, and how to resolve them in practice. There is even disagreement about which kinds of algorithmic outcomes should be considered discriminatory [171]. Despite the difficulties and uncertainties, machine learning practitioners (and particularly those who use deep neural networks, which are challenging to interpret) must remain cognizant of these dangers and make every effort to prevent harm from discriminatory predictions. To reach their potential in this domain, deep learning methods will need to be interpretable (see Discussion). Researchers need to consider the extent to which biases may be learned by the model and whether or not a model is sufficiently interpretable to identify bias. We discuss the challenge of model interpretability more thoroughly in Discussion.

Longitudinal analysis follows a population across time, for example, prospectively from birth or from the onset of particular conditions. In large patient populations, longitudinal analyses such as the Framingham Heart Study [172] and the Avon Longitudinal Study of Parents and Children [173] have yielded important discoveries about the development of disease and the factors contributing to health status. Yet, a common practice in EHR-based research is to take a snapshot at a point in time and convert patient data to a traditional vector for machine learning and statistical analysis. This results in loss of information as timing and order of events can provide insight into a patient’s disease and treatment [174]. Efforts to model sequences of events have shown promise [175] but require exceedingly large patient sizes due to discrete combinatorial bucketing. Lasko et al. [114] used autoencoders on longitudinal sequences of serum uric acid measurements to identify population subtypes. More recently, deep learning has shown promise working with both sequences (CNNs) [176] and the incorporation of past and current state (RNNs, LSTMs) [118]. This may be a particular area of opportunity for deep neural networks. The ability to recognize relevant sequences of events from a large number of trajectories requires powerful and flexible feature construction methods—an area in which deep neural networks excel.

The study of cellular structure and core biological processes—transcription, translation, signaling, metabolism, etc.—in humans and model organisms will greatly impact our understanding of human disease over the long horizon [177]. Predicting how cellular systems respond to environmental perturbations and are altered by genetic variation remain daunting tasks. Deep learning offers new approaches for modeling biological processes and integrating multiple types of omic data [178], which could eventually help predict how these processes are disrupted in disease. Recent work has already advanced our ability to identify and interpret genetic variants, study microbial communities, and predict protein structures, which also relates to the problems discussed in the drug development section. In addition, unsupervised deep learning has enormous potential for discovering novel cellular states from gene expression, fluorescence microscopy, and other types of data that may ultimately prove to be clinically relevant.

Progress has been rapid in genomics and imaging, fields where important tasks are readily adapted to well-established deep learning paradigms. One-dimensional convolutional and recurrent neural networks are well-suited for tasks related to DNA- and RNA-binding proteins, epigenomics, and RNA splicing. Two dimensional CNNs are ideal for segmentation, feature extraction, and classification in fluorescence microscopy images [17]. Other areas, such as cellular signaling, are biologically important but studied less-frequently to date, with some exceptions [179]. This may be a consequence of data limitations or greater challenges in adapting neural network architectures to the available data. Here, we highlight several areas of investigation and assess how deep learning might move these fields forward.

Gene expression technologies characterize the abundance of many thousands of RNA transcripts within a given organism, tissue, or cell. This characterization can represent the underlying state of the given system and can be used to study heterogeneity across samples as well as how the system reacts to perturbation. While gene expression measurements were traditionally made by quantitative polymerase chain reaction (qPCR), low-throughput fluorescence-based methods, and microarray technologies, the field has shifted in recent years to primarily performing RNA sequencing (RNA-seq) to catalog whole transcriptomes. As RNA-seq continues to fall in price and rise in throughput, sample sizes will increase and training deep models to study gene expression will become even more useful.

Already several deep learning approaches have been applied to gene expression data with varying aims. For instance, many researchers have applied unsupervised deep learning models to extract meaningful representations of gene modules or sample clusters. Denoising autoencoders have been used to cluster yeast expression microarrays into known modules representing cell cycle processes [180] and to stratify yeast strains based on chemical and mutational perturbations [181]. Shallow (one hidden layer) denoising autoencoders have also been fruitful in extracting biological insight from thousands of Pseudomonas aeruginosa experiments [182,183] and in aggregating features relevant to specific breast cancer subtypes [26]. These unsupervised approaches applied to gene expression data are powerful methods for identifying gene signatures that may otherwise be overlooked. An additional benefit of unsupervised approaches is that ground truth labels, which are often difficult to acquire or are incorrect, are nonessential. However, the genes that have been aggregated into features must be interpreted carefully. Attributing each node to a single specific biological function risks over-interpreting models. Batch effects could cause models to discover non-biological features, and downstream analyses should take this into consideration.

Deep learning approaches are also being applied to gene expression prediction tasks. For example, a deep neural network with three hidden layers outperformed linear regression in inferring the expression of over 20,000 target genes based on a representative, well-connected set of about 1,000 landmark genes [184]. However, while the deep learning model outperformed existing algorithms in nearly every scenario, the model still displayed poor performance. The paper was also limited by computational bottlenecks that required data to be split randomly into two distinct models and trained separately. It is unclear how much performance would have increased if not for computational restrictions.

Epigenomic data, combined with deep learning, may have sufficient explanatory power to infer gene expression. For instance, the DeepChrome CNN [185] improved prediction accuracy of high or low gene expression from histone modifications over existing methods. AttentiveChrome [186] added a deep attention model to further enhance DeepChrome. Deep learning can also integrate different data types. For example, Liang et al. combined RBMs to integrate gene expression, DNA methylation, and miRNA data to define ovarian cancer subtypes [187]. While these approaches are promising, many convert gene expression measurements to categorical or binary variables, thus ablating many complex gene expression signatures present in intermediate and relative numbers.

Deep learning applied to gene expression data is still in its infancy, but the future is bright. Many previously untestable hypotheses can now be interrogated as deep learning enables analysis of increasing amounts of data generated by new technologies. For example, the effects of cellular heterogeneity on basic biology and disease etiology can now be explored by single-cell RNA-seq and high-throughput fluorescence-based imaging, techniques we discuss below that will benefit immensely from deep learning approaches.